Hi, I’m Pratinav Seth! 👋

I am a Research Scientist at Lexsi Labs, where I focus on building responsible AI systems through advancements in Tabular Foundation Models, AI alignment, and interpretability-guided safety. I currently oversee a team of four full-time researchers, driving R&D initiatives that leverage interpretability as a core design principle to enhance model reliability and transparency.

Prior to Lexsi Labs, I completed my B.Tech in Data Science from Manipal Institute of Technology and conducted research at Mila Quebec AI Institute (under Dr. David Rolnick), Bosch Research India, and IIT Kharagpur. I am honored to be an AAAI Undergraduate Consortium Scholar and am passionate about applying AI for social good, particularly in medical imagery and remote sensing.

I’m always eager to connect—feel free to reach out or check out my Resume for more details. 🚀

🚀 Key Highlights

- 📚 24+ Peer-Reviewed Publications (9 Main Conference + 15 Workshop) at top-tier venues including ICML, ACL, WWW, NeurIPS, CVPR, and AAAI.

- 🏆 AAAI Undergraduate Consortium Scholar & Mentor (2023, 2026).

🔥 News

Recent Publications & Acceptances

- 2026.01: 🎉 Orion-BiX: Bi-Axial Attention for Tabular In-Context Learning accepted at WWW 2026!

- 2026.01: 🎉 Exploring Fine-Tuning for Tabular Foundation Models accepted at WWW 2026!

- 2026.01: 🎉 TabTune: A Unified Library for Inference and Fine-Tuning Tabular Foundation Models (Demo) accepted at WWW 2026!

- 2026.01: 🎉 Laplacian reconstructive network for guided thermal super-resolution accepted at Scientific Reports (Nature)!

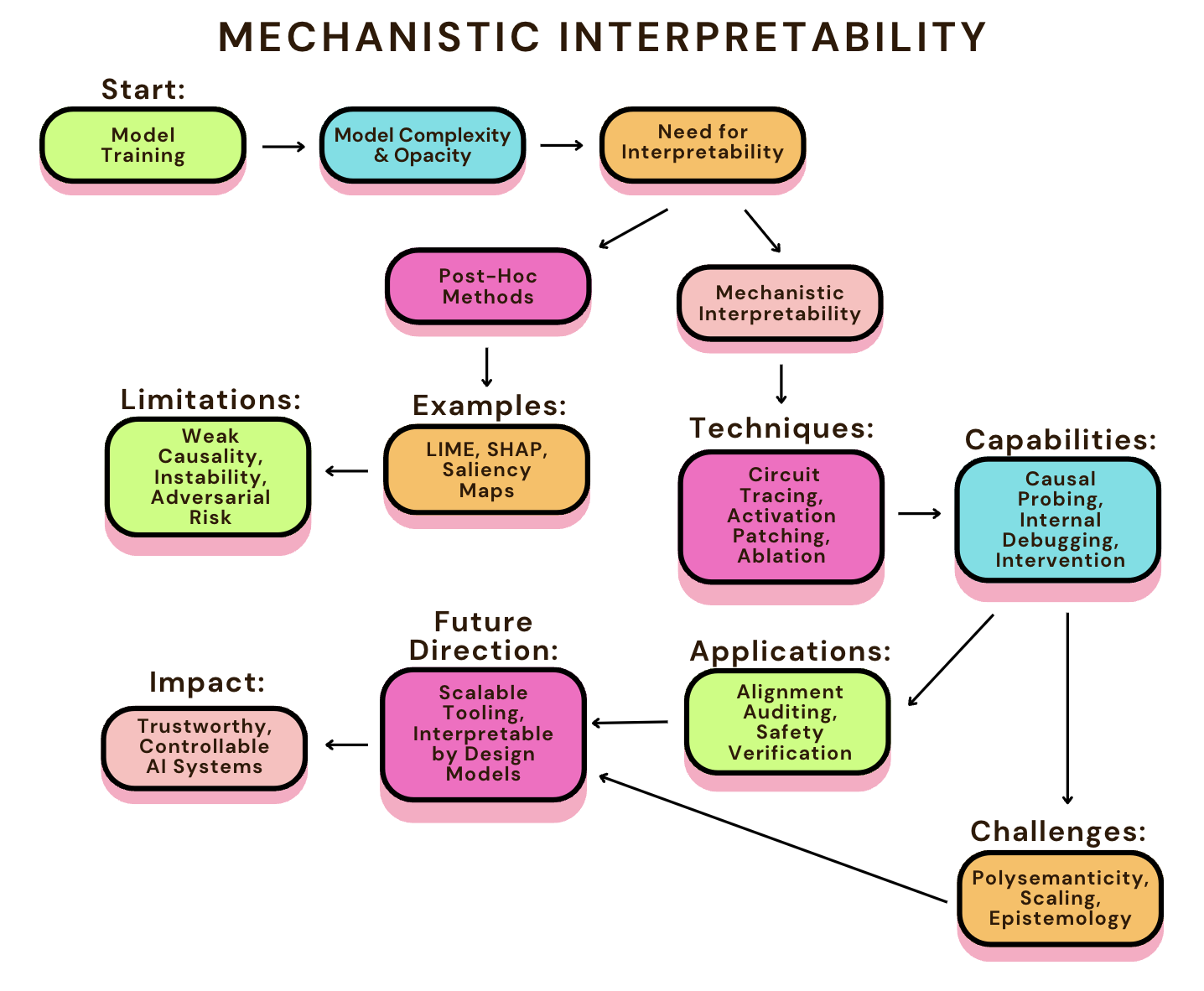

- 2025.11: 🎉 Interpretability as Alignment: Making Internal Understanding a Design Principle accepted at EurIPS Workshop on Private AI Governance!

- 2025.11: 🎉 Bridging the Gap in XAI: Why Reliable Metrics Matter for Explainability and Compliance accepted at EurIPS Workshop on Private AI Governance!

- 2025.11: 🎉 Spotlight talk at the EurIPS 2025 Workshop on Private AI Governance!

- 2025.09: 🎉 Interpretability-aware pruning for efficient medical image analysis accepted at MICCAI Workshop 2025!

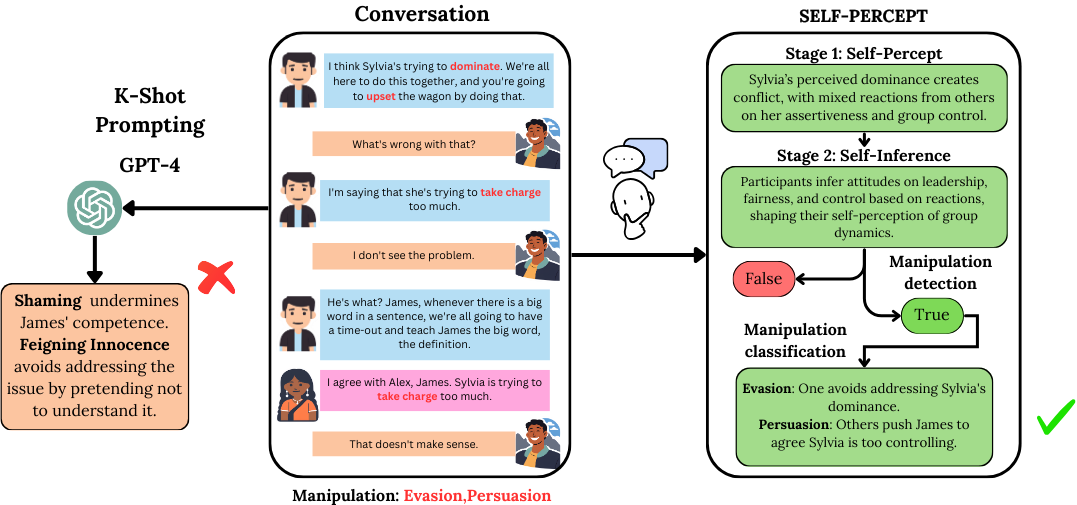

- 2025.05: 🎉 SELF-PERCEPT: Mental Manipulation Detection accepted at ACL 2025!

- 2025.05: 🎉 Alberta Wells Dataset accepted at ICML 2025! Really grateful to the team and to Prof. David Rolnick for their efforts.

Academic Service & Reviewing

- 2026.01: 📝 Reviewer for ECCV 2026 & CVPR 2026

- 2025.12: 📝 Mentor at AAAI Undergraduate Consortium 2026

- 2025.09: 📝 Reviewer for RegML Workshop (NeurIPS 2025)

- 2025.08: 📝 Reviewer for WACV 2026

- 2025.05: 📝 Reviewer for Actionable Interpretability Workshop (ICML 2025)

- 2025.04: 📝 Reviewer for ICCV 2025

- 2025.03: 📝 Reviewer for IJCNN 2025 & Advances in Financial AI Workshop (ICLR 2025-26)

- 2025.02: 📝 Reviewer for CVPR 2025

📝 Publications

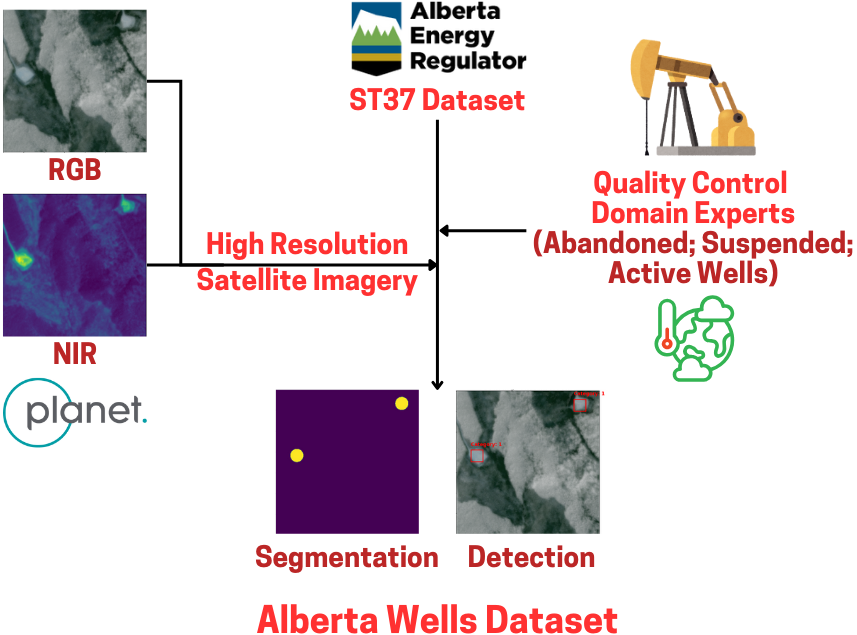

Alberta Wells Dataset: Pinpointing Oil and Gas Wells from Satellite Imagery

Pratinav Seth(#), Michelle Lin(#), Brefo Dwamena Yaw, Jade Boutot, Mary Kang, David Rolnick

Danush Khanna, Pratinav Seth, Sidhaarth Sredharan Murali, Aditya Kumar Guru, Siddharth Shukla, Tanuj Tyagi, Sandeep Chaurasia, Kripabandhu Ghosh

Interpretability as Alignment: Making Internal Understanding a Design Principle

Aadit Sengupta, Pratinav Seth, Vinay Kumar Sankarapu

Orion-MSP: Multi-Scale Sparse Attention for Tabular In-Context Learning

Mohamed Bouadi, Pratinav Seth, Aditya Tanna, Vinay Kumar Sankarapu

Orion-BiX: Bi-Axial Attention for Tabular In-Context Learning

Mohamed Bouadi, Pratinav Seth, Aditya Tanna, Vinay Kumar Sankarapu

Exploring Fine-Tuning for Tabular Foundation Models

Aditya Tanna, Pratinav Seth, Mohamed Bouadi, Vinay Kumar Sankarapu

TabTune: A Unified Library for Inference and Fine-Tuning Tabular Foundation Models (Demo)

Aditya Tanna, Pratinav Seth, Mohamed Bouadi, Utsav Avaiya, Vinay Kumar Sankarapu

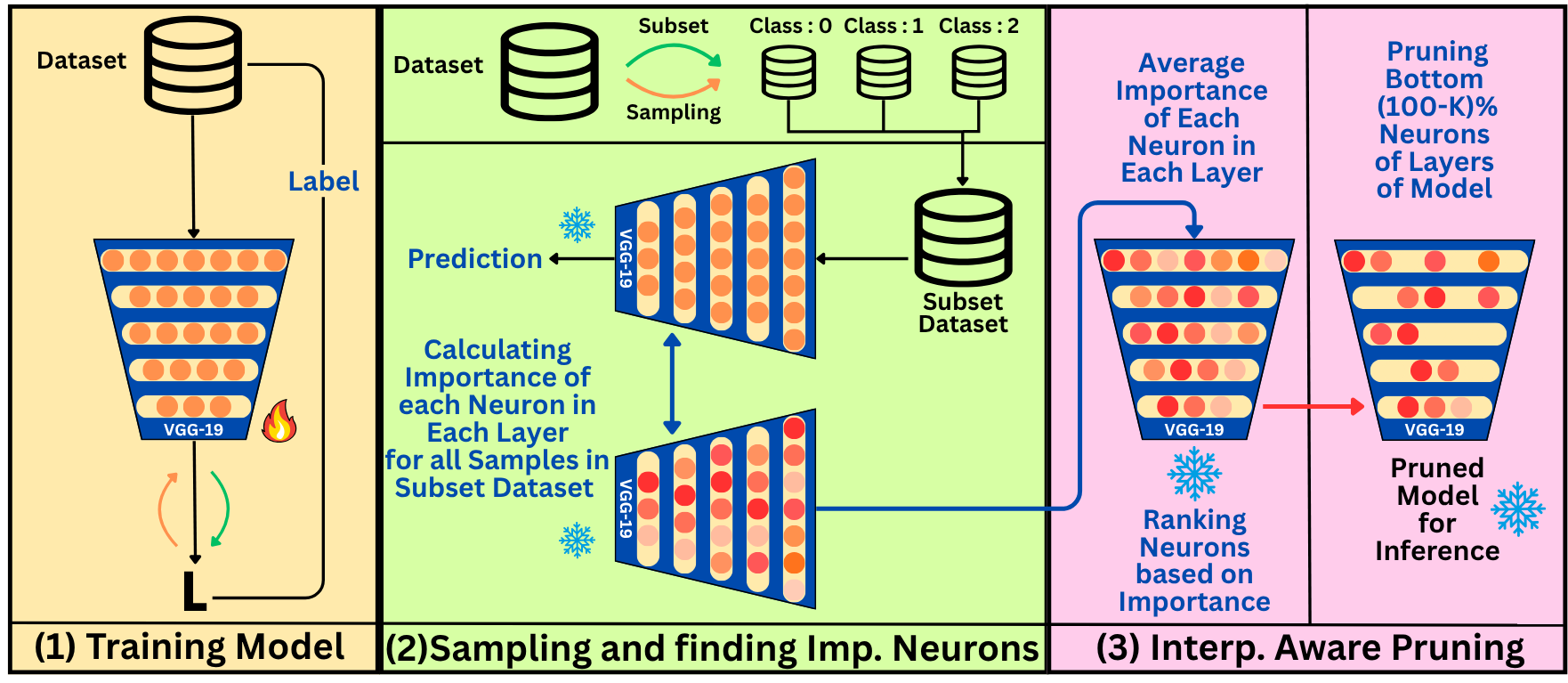

Interpretability-aware pruning for efficient medical image analysis

Nikita Malik, Pratinav Seth, Neeraj Kumar Singh, Chintan Chitroda, Vinay Kumar Sankarapu

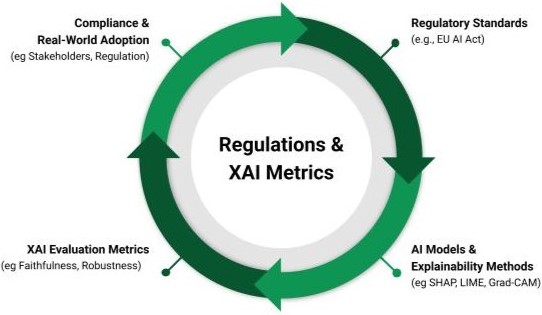

Bridging the Gap in XAI: Why Reliable Metrics Matter for Explainability and Compliance

Pratinav Seth, Vinay Kumar Sankarapu

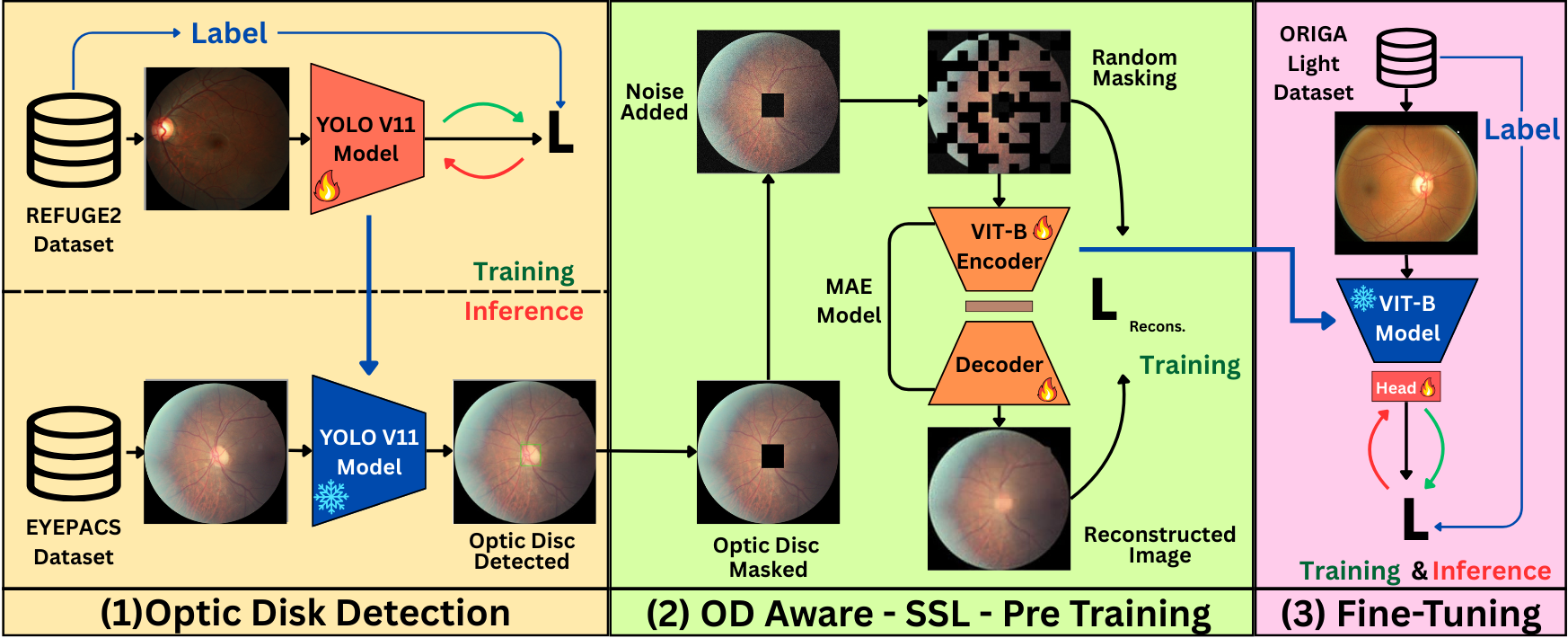

Obscure to Observe: A Lesion-Aware MAE for Glaucoma Detection from Retinal Context

Siddhant Bharadwaj, Pratinav Seth, Chandra Sekhar Seelamantula

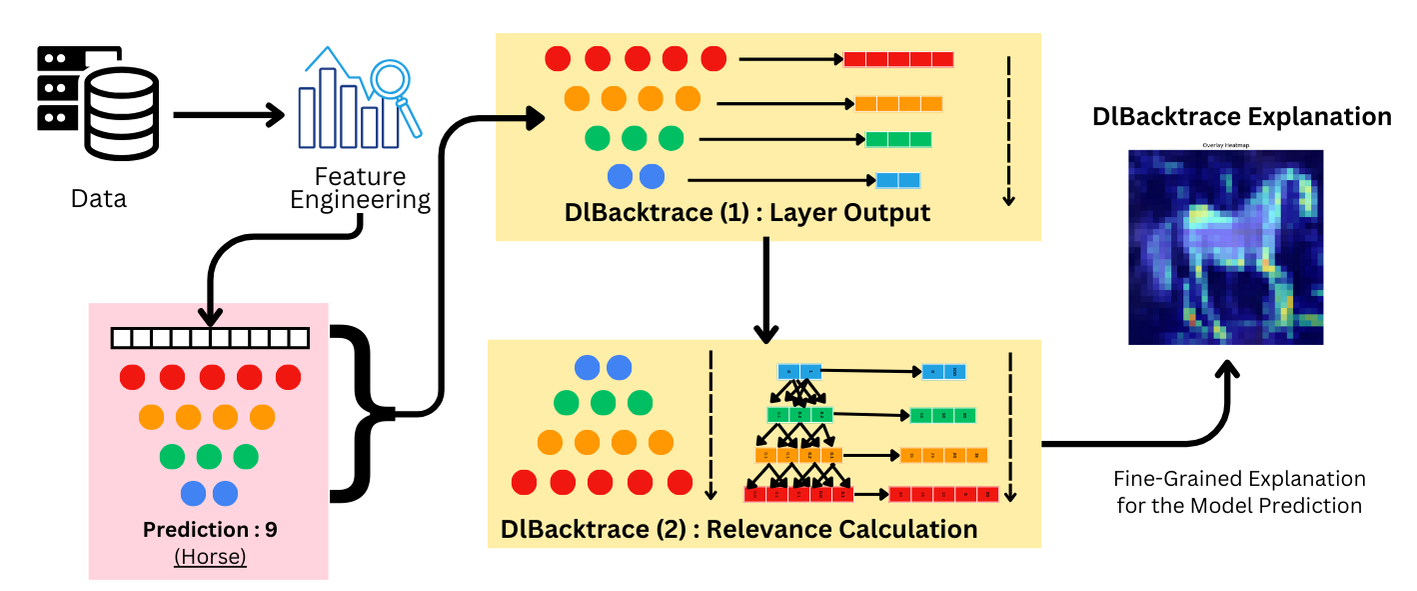

DLBacktrace: A Model Agnostic Explainability for any Deep Learning Models

Vinay Kumar Sankarapu, Chintan Chitroda, Yashwardhan Rathore, Neeraj Kumar Singh, Pratinav Seth

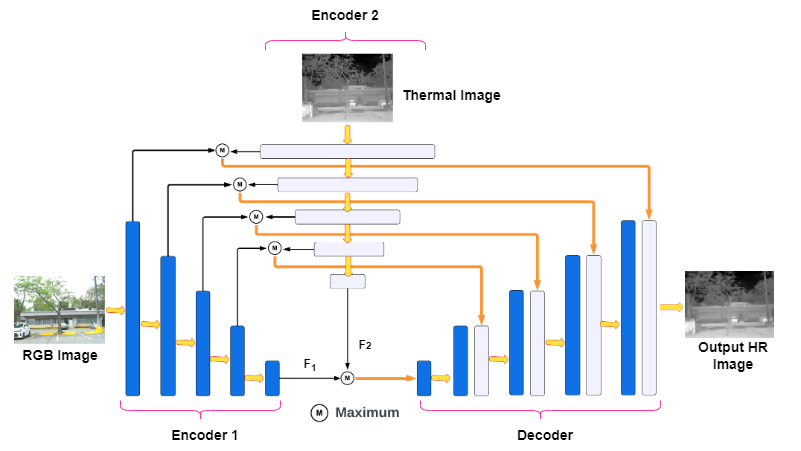

CoReFusion: Contrastive Regularized Fusion for Guided Thermal Super-Resolution

Aditya Kasliwal, Pratinav Seth, Sriya Rallabandi, Sanchit Singhal

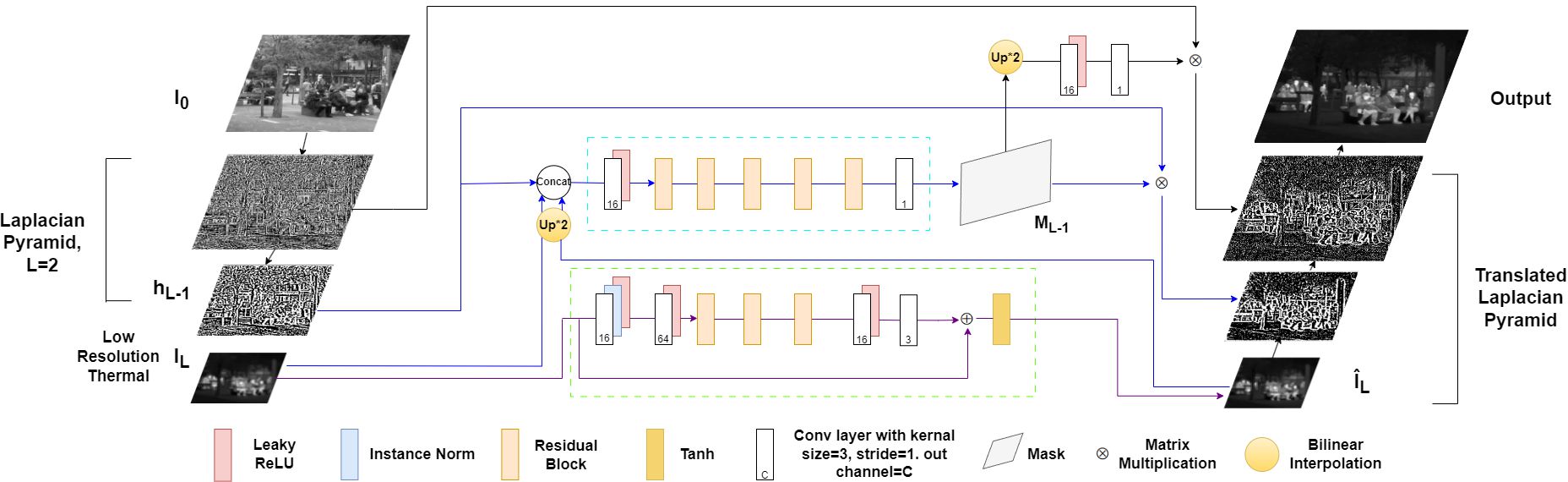

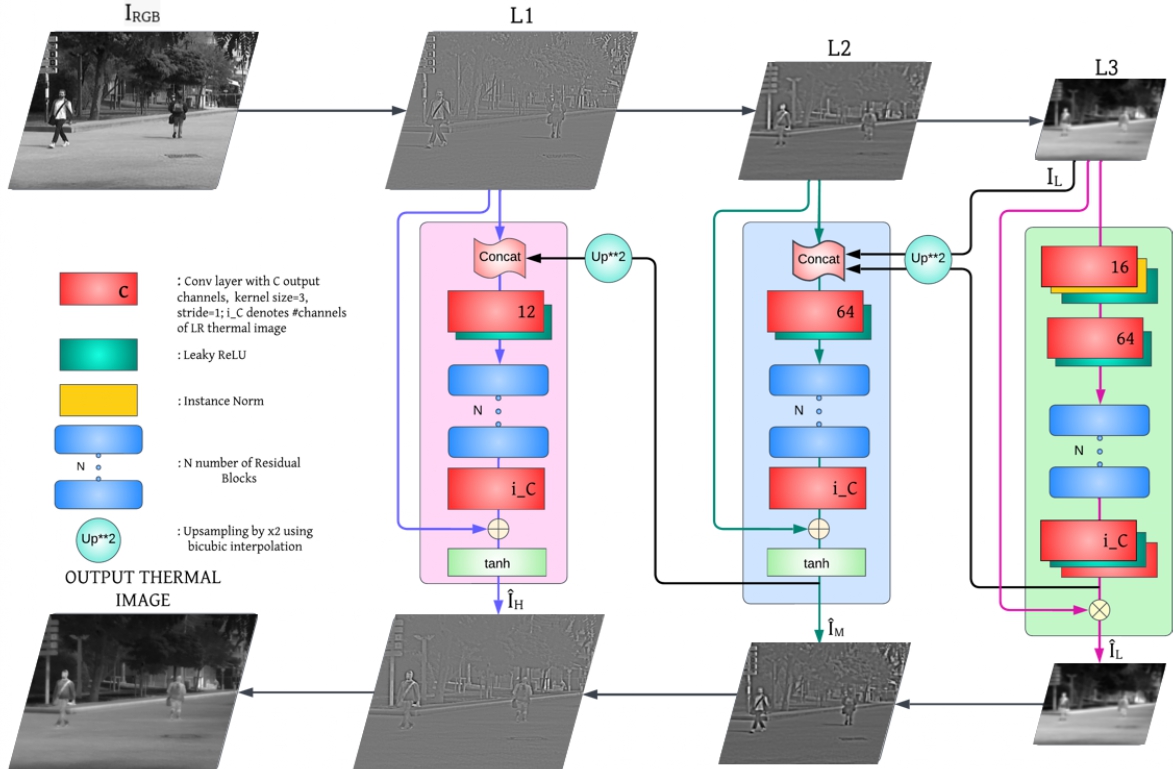

LaMAR: Laplacian Pyramid for Multimodal Adaptive Super Resolution (Student Abstract)

Aditya Kasliwal, Aryan Kamani, Ishaan Gakhar, Pratinav Seth, Sriya Rallabandi

Laplacian reconstructive network for guided thermal super-resolution

Aditya Kasliwal, Ishaan Gakhar, Aryan Kamani, Pratinav Seth, Ujjwal Verma

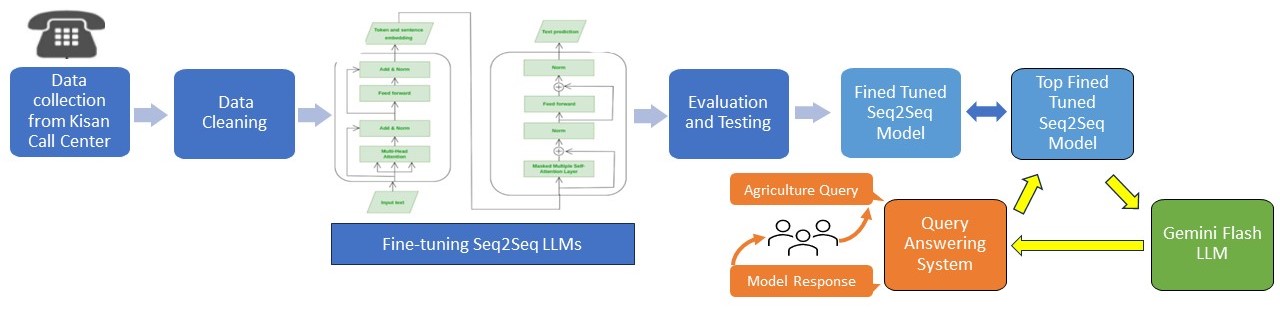

AgriLLM: Harnessing transformers for farmer queries.

Krish Didwania (†), Pratinav Seth (†), Aditya Kasliwal, Amit Agarwal

- Performance Evaluation of Deep Segmentation Models for Contrails Detection, Akshat Bhandari, Sriya Rallabandi, Sanchit Singhal, Aditya Kasliwal, Pratinav Seth, Tackling Climate Change with Machine Learning Workshop at NeurIPS 2022.

- Sailing Through Spectra: Unveiling the Potential of Multi-Spectral Information in Marine Debris Segmentation, Dyutit Mohanty, Aditya Kasliwal, Bharath Udupa, Pratinav Seth, The Second Tiny Papers Track at ICLR 2024.

- ReFuSeg: Regularized Multi-Modal Fusion for Precise Brain Tumour Segmentation, Aditya Kasliwal, Sankarshanaa Sagaram, Laven Srivastava, Pratinav Seth, Adil Khan, 9th Edition of the Brain Lesion (BrainLes) Workshop, MICCAI 2023.

- UATTA-ENS: Uncertainty Aware Test Time Augmented Ensemble for PIRC Diabetic Retinopathy Detection, Pratinav Seth, Adil Khan, Ananya Gupta, Saurabh Kumar Mishra, Akshat Bhandari, Medical Imaging meets NeurIPS Workshop, NeurIPS 2022.

- UATTA-EB: Uncertainty-Aware Test-Time Augmented Ensemble of BERTs for Classifying Common Mental Illnesses on Social Media Posts, Pratinav Seth (†), Mihir Agarwal (†), 1st Tiny Paper Track at ICLR 2023.

- Evaluating Predictive Uncertainty and Robustness to Distributional Shift Using Real World Data, Kumud Lakara (†), Akshat Bhandari (†), Pratinav Seth (†), Ujjwal Verma, Bayesian Deep Learning Workshop, NeurIPS 2021.

- SSS at SemEval-2023 Task 10: Explainable Detection of Online Sexism using Majority Voted Fine-Tuned Transformers, Sriya Rallabandi, Sanchit Singhal, Pratinav Seth, Proceedings of the 17th International Workshop on Semantic Evaluation (SemEval-2023), ACL 2023.

- RSM-NLP at BLP-2023 Task 2: Bangla Sentiment Analysis using Weighted and Majority Voted Fine-Tuned Transformers, Pratinav Seth, Rashi Goel, Komal Mathur, Swetha Vemulapalli, Proceedings of the 1st Workshop on Bangla Language Processing (BLP 2023), EMNLP 2023.

- HGP-NLP at Shared Task: Leveraging LoRA for Lay Summarization of Biomedical Research Articles using Seq2Seq Transformers, Hemang Malik, Gaurav Pradeep, Pratinav Seth, BioNLP 2024 Workshop, ACL 2024.

- Analyzing Effects of Fake Training Data on the Performance of Deep Learning Systems, Pratinav Seth (†), Akshat Bhandari (†), Kumud Lakara (†), Pre-print.

- xai_evals: A Framework for Evaluating Post-Hoc Local Explanation Methods, Pratinav Seth, Yashwardhan Rathore, Neeraj Kumar Singh, Chintan Chitroda, Vinay Kumar Sankarapu, Technical Report.

🎓 Academic Service

- Mentor: AAAI Undergraduate Consortium 2026

Conference Reviewing & Program Committee

- Main Conference Reviewer: CVPR (2025-26), ECCV (2024, 2026), ICCV 2025, WACV 2026, IJCNN 2025, AAAI 2026

- Workshop Reviewer:

  - NLP for Positive Impact Workshop (EMNLP 2024)

  - SyntheticData4ML Workshop (NeurIPS 2022, 2023)

  - Bayesian Decision-making and Uncertainty Workshop (NeurIPS 2024)

  - Frontiers in Probabilistic Inference (ICLR 2025)

  - Topological, Algebraic, and Geometric P.R.A. Workshop (CVPR 2023)

  - Domain Adaptation and Representation Transfer Workshop (MICCAI 2023)

  - FAIMI Workshop (MICCAI 2024)

  - Advances in Financial AI Workshop (ICLR 2025-26)

  - Actionable Interpretability Workshop (ICML 2025)

  - RegML Workshop (NeurIPS 2025)

💻 Professional Experience

Research Positions

- 2025.07 - Present, Research Scientist at Lexsi Labs, Remote

  - Tabular Foundation Models: Contributed to the development of foundation models for tabular data in high-stakes domains; co-developed a library for inference, fine-tuning, and benchmarking of tabular foundation models

  - Interpretability-Guided Alignment: Investigating model optimization (pruning, quantization) and alignment (fine-tuning, RL-based alignment, unlearning) strategies across various model architectures, leveraging interpretability as a design principle and guiding mechanism

  - Research & POCs: Led proof-of-concept (POC) projects for model optimization, fine-tuning, alignment, and internal research tooling to accelerate experimental workflows

  - Leadership & Mentorship: Oversee four full-time researchers; mentored six research interns; oversaw recruitment of interns and full-time scientists (Paris and India); authored technical and research documentation for stakeholders; initiated proof-of-concept projects to advance internal algorithmic capabilities

  - Representation: Presented a spotlight talk at NeurIPS 2025; presented a poster at the MICCAI Workshop 2025

  - Publications:

    - Interpretability-Aware Pruning for Efficient Medical Image Analysis. 2025. MICCAI Workshop 2025 (LNCS).

    - Interpretability as Alignment: Making Internal Understanding a Design Principle. 2025. Position Paper (Accepted at EurIPS Workshop on Private AI Governance).

    - TabTune: A Unified Library for Inference and Fine-Tuning Tabular Foundation Models. 2026. Accepted at WWW 2026.

    - Orion-MSP: Multi-Scale Sparse Attention for Tabular In-Context Learning. 2025. Pre-print.

    - Orion-BiX: Bi-Axial Attention for Tabular In-Context Learning. 2026. Accepted at WWW 2026.

    - Exploring Fine-Tuning for Tabular Foundation Models. 2026. Accepted at WWW 2026.

- 2024.07 - 2025.06, Research Scientist at AryaXAI Alignment Labs, Remote / Mumbai, India

  - Research Focus: Worked at the intersection of Explainable AI (XAI), AI alignment, and AI safety in high-stakes domains: interpreting black-box models, assessing XAI reliability, and developing foundation models for tabular data in fraud detection and other mission-critical applications

  - Explainability: Enhanced the DL-Backtrace method by generalizing its mechanics for model-agnostic use; co-developed a benchmarking framework for the systematic evaluation of XAI techniques

  - XAI-Guided Optimization & Alignment: Investigated model-agnostic post-hoc optimization and alignment strategies across various model architectures, leveraging interpretability for safer, more reliable model behavior

  - Leadership & Mentorship: Mentored two research interns; led recruitment of interns and full-time scientists (Paris and India); authored technical and research documentation for stakeholders; initiated proof-of-concept (POC) projects to advance internal algorithmic capabilities

  - Representation: Served as the R&D representative in client-facing engagements and presented AryaXAI solutions at industry forums, including the 5th MLOps Conference

  - Publications:

    - DL-Backtrace: A Model-Agnostic Explainability Method for Deep Learning Models. Accepted at IJCNN 2025.

    - XAI Evals: A Framework for Evaluating Post-Hoc Local Explanation Methods. Technical Report, 2025.

    - Bridging the Gap in XAI: Why Reliable Metrics Matter for Explainability and Compliance. Accepted at EurIPS Workshop on Private AI Governance, 2025.

- 2024.01 - 2024.06, Research Intern at Rolnick Lab, Mila Quebec AI Institute, Remote

  - Project: Computer vision and deep learning for geospatial applications targeting climate change

  - Focus: Detecting abandoned oil and gas wells from satellite imagery; created a new geospatial dataset and benchmarked deep learning models

  - Mentor: Dr. David Rolnick (McGill University, Université de Montréal, Mila)

  - Outcome: Led to an ICML 2025 publication on the Alberta Wells Dataset

- 2023.06 - 2023.10, Computer Vision Research Intern at Robert Bosch Research and Technology Center India, Bangalore

  - Project: Vision-based generative AI for autonomous driving using Latent Diffusion Models

  - Focus: Generating additional data for difficult or misclassified samples to improve downstream task network optimization

  - Mentors: Mr. Koustav Mullick (CR/RDT-2), Dr. Amit Kale

- 2021.03 - 2024.01, Research Progression at Mars Rover Manipal

  - Advanced from Trainee to Senior Researcher and Mentor

  - Led AI research initiatives resulting in multiple publications at NeurIPS, ACL, AAAI, CVPR, and other venues, with projects in Generative AI, Medical Image Analysis, and Climate Change.

  - Built a team of 10+ members and mentored them in their research.

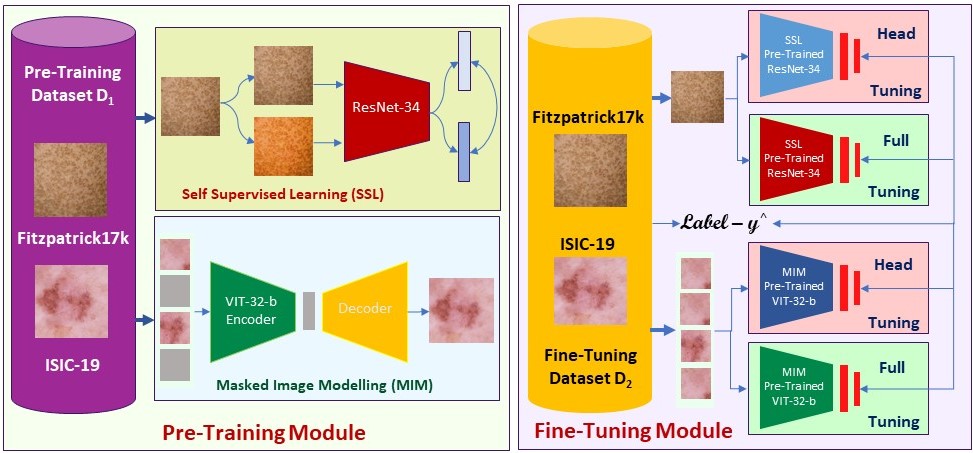

- 2023.04 - 2023.12, Research Assistant under Dr. Abhilash K. Pai, Dept. of DSCA, MIT MAHE

  - Focused on medical image analysis and fairness in AI.

  - Studied the effects of pretraining techniques on skin-tone bias in skin lesion classification, supported by a MAHE Undergraduate Research Grant; this work led to a publication at the Pre-Train Workshop at WACV 2024.

Research Collaborations

- 2023.12 - 2024.01, Research Collaboration with Dr. Amit Agarwal, Wells Fargo AI COE

- 2022.05 - 2023.12, Research Intern at KLIV Lab, IIT Kharagpur under Dr. Debdoot Sheet and mentored by Mr. Rakshith Satish. Worked on integrating domain knowledge in medical image analysis using Graph Convolutional Networks and Explainable AI for chest radiographs.

- 2022.10 - 2023.03, Research Collaboration with IIT Roorkee.

Leadership Roles

- 2022.09 - 2023.09, Co-President & AI Research Mentor, Research Society MIT Manipal

- 2022.11 - 2023.08, Co-founder & Head of AI, The Data Alchemists

Early Career Experience

- 2022.06 - 2022.09, Research Assistant, Dept. of DSCA, MIT MAHE, under Dr. Vidya Rao & Dr. Poornima P.K., working on the International Cyber Security Data Mining Competition; the team finished 5th out of 134+ teams.

- 2022.03 - 2022.05, Machine Learning Intern, Eedge.ai

- 2022.01 - 2022.02, Data Science (NLP) Intern, CUREYA

🎖 Honors and Awards

- 2023.02 Selected as one of 11 undergraduates for the AAAI Undergraduate Consortium 2023, with a $2,000 travel grant to present at AAAI-23 in Washington, DC, USA.

- 2023.01 Received a MAHE Undergraduate Research Grant worth INR 10,000 for the project "Explainable & Trustworthy Skin Lesion Classification" under Dr. Abhilash K. Pai, Dept. of DSCA, Manipal Institute of Technology, MAHE.

- 2022.06 Top 10 Team out of 1000+ submissions in Bajaj Finserv HackRx3.0 Hackathon.

📖 Education

- 2020.10 - 2024.07, Bachelor of Technology (B.Tech) in Data Science & Engineering, Manipal Academy of Higher Education, Manipal, Karnataka, India.

  - CGPA: 8.31/10

🛠️ Technical Skills

🧠 Machine Learning & AI

- Frameworks: PyTorch, TensorFlow, Keras, Scikit-Learn, HuggingFace, NetworkX

- Specialized: LLM Alignment (Fine-tuning, RLHF, DPO), Tabular Foundation Models, Model Optimization (Pruning, Quantization), Interpretability (XAI), Uncertainty Quantification, CUDA Programming, Distributed Training, Mixed Precision

- Libraries: NumPy, Pandas, Seaborn, Matplotlib, OpenCV, PIL, NLTK, SpaCy, GeoPandas, Shapely

💻 Programming & Tools

- Languages: Python, C++, SQL, Java, C, LaTeX, HTML/CSS

- Development: Git, Linux/Bash, HPC (SLURM), Docker, Jupyter, Google Colab

- Platforms: AWS, GCP, Hugging Face Hub, Weights & Biases

🏅 Certifications

- Deep Learning Specialization - DeepLearning.ai

- 6th Summer School on AI - CVIT IIITH

💬 Invited Talks & Presentations

2024-2025

- Spotlight Talk: “Scientific Impact” at NeurIPS 2025 (December 2025)

- AryaXAI Alignment Lab Webinars:

  - “Inside the Black Box: Interpreting LLMs with DL-Backtrace (DLB)”

  - “Beyond Explainability – Evaluating XAI Methods with Confidence Using xai_evals”

  - “Interpretability Aware Pruning in Medical Imagery” (Paper Podcast)

- 2024.03, Introduction to Research, at ACM-W Manipal Chapter.

- 2024.02, Data Dialogue, invited by The Data Alchemists, The Official Data Science Club of MIT Manipal.

2023

- 2023.03, Research as Undergrad, at ACM-W Manipal Chapter.